Three-Tier Architecture for Web Applications on AWS

In this post, I describe a three-tier architecture for web applications on Amazon Web Services (AWS). Following the best practices recommended by the AWS Well-Architected Framework, the architecture is designed for availability, security, performance, reliability and cost optimization.

This post assumes that the reader has a good understanding of AWS services such as Amazon Virtual Private Cloud (VPC), public and private subnets, EC2 instances, Elastic Load Balancing, Auto Scaling groups and Amazon Route 53.

First, I’ll discuss the key steps in setting up a three-tier application on AWS using a proposed architecture, and then briefly explain how it meets the availability, scalability, security, performance and reliability targets.

Before getting into details, let’s have a quick look at a classic three-tier architecture. It is a client-server architecture pattern that consists of three layers: the user interface (presentation), business logic and data storage layers. The goal of this architecture is to modularize the application so that each tier can be managed independently. Decoupling the tiers helps teams focus on specific tiers and make changes quickly. It makes maintenance easier and helps the system recover quickly from an unexpected failure, because only the faulty tier needs attention.

It also strengthens the overall security of your application: only the web servers are exposed to internet traffic, while the application servers that hold the business logic are isolated and can only be accessed internally by the web servers. Similarly, the data persistence layer is separated and can only be accessed by the application servers.

Now let’s discuss how a three-tier architecture could be built on AWS. The main steps are:

- Setting up a VPC with public and private subnets for multiple Availability Zones

- Setting up Load Balancers for Web and Application servers

- Provisioning EC2 instances within Auto Scaling groups

- Setting up the Database tier

- Setting up DNS with Amazon Route 53

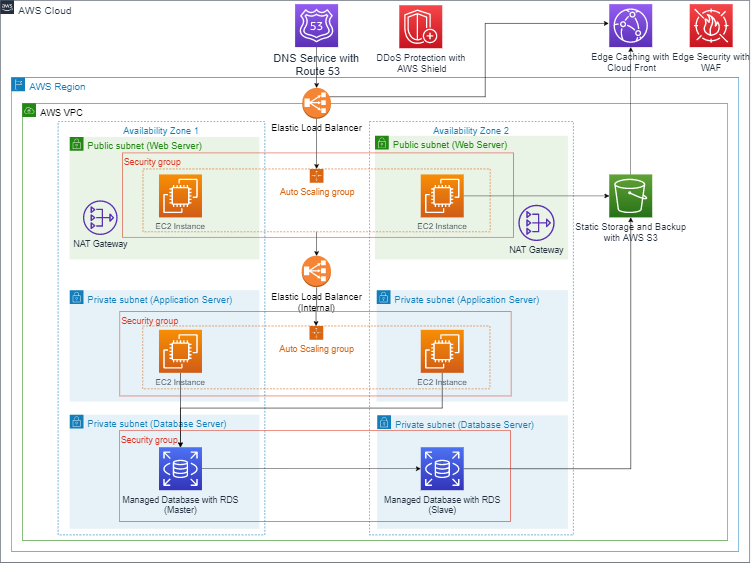

Proposed Architecture

Setting up a VPC with public and private subnets for multiple Availability Zones

The first and most important step is to create a custom Virtual Private Cloud (VPC). Amazon VPC gives you a logically isolated section of the AWS cloud where you can provision the resources for your application. You manage the entire network configuration, including private IP address ranges, public and private subnets, route tables and network gateways. This way, you decide which AWS resources are placed in a public-facing subnet and which stay private with no external access.

In a multi-tier architecture, the web servers are placed in public subnets, while the application and database servers are provisioned in private subnets that are not publicly accessible. The public and private subnets must be able to communicate with each other. NAT gateways give resources in the private subnets outbound internet access, e.g. for installing patches, without allowing inbound connections from the internet.
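To make the subnet layout concrete, here is a minimal Python sketch (standard library only) that carves one VPC CIDR block into a public web subnet and private application and database subnets in each of two Availability Zones. The CIDR 10.0.0.0/16, the /24 subnet size and the AZ names are illustrative assumptions, not values from the proposed architecture, and the function only plans addresses; it does not call any AWS API.

```python
import ipaddress

VPC_CIDR = "10.0.0.0/16"            # assumption: example VPC address range
AZS = ["us-east-1a", "us-east-1b"]  # assumption: example AZ names
TIERS = [("web", True), ("app", False), ("db", False)]  # (tier, is_public)


def plan_subnets(vpc_cidr: str, azs: list[str], new_prefix: int = 24) -> list[dict]:
    """Carve one subnet per (tier, AZ) pair out of the VPC CIDR, in order."""
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = vpc.subnets(new_prefix=new_prefix)  # iterator over consecutive /24s
    plan = []
    for tier, is_public in TIERS:
        for az in azs:
            plan.append({
                "tier": tier,
                "az": az,
                "cidr": str(next(blocks)),
                "public": is_public,  # only the web tier gets a route to the internet gateway
            })
    return plan


if __name__ == "__main__":
    for s in plan_subnets(VPC_CIDR, AZS):
        kind = "public" if s["public"] else "private"
        print(f'{s["tier"]:<4} {s["az"]:<11} {s["cidr"]:<14} {kind}')
```

Giving each tier its own subnet in every AZ keeps the routing rules per tier simple and leaves room in the /16 for more tiers or AZs later.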

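You can manage access and security restrictions using security groups, which act as stateful firewalls for instances, and network access control lists (NACLs), which act as stateless firewalls for subnets. Amazon VPC gives you fine-grained control over inbound and outbound traffic rules.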
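Network ACLs are evaluated in rule-number order, lowest first; the first matching rule decides, and traffic that matches no rule is denied by the default rule. Because they are stateless, return traffic has to be allowed explicitly. The sketch below models inbound evaluation for a hypothetical public web subnet. The rule numbers, CIDRs and ports are assumptions for illustration, and the model ignores protocols and outbound rules.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class NaclRule:
    number: int      # rules are evaluated from lowest to highest number
    cidr: str        # source CIDR for this inbound rule
    port_from: int
    port_to: int
    allow: bool


def evaluate_inbound(rules: list[NaclRule], src_ip: str, port: int) -> bool:
    """Return the decision of the first matching rule, or deny if none matches."""
    addr = ipaddress.ip_address(src_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        in_range = rule.port_from <= port <= rule.port_to
        if in_range and addr in ipaddress.ip_network(rule.cidr):
            return rule.allow  # first match wins; later rules are ignored
    return False  # the default '*' rule denies everything else


# Hypothetical inbound rules for the public web subnet
WEB_SUBNET_NACL = [
    NaclRule(90, "203.0.113.0/24", 0, 65535, allow=False),  # block a known-bad range
    NaclRule(100, "0.0.0.0/0", 443, 443, allow=True),       # HTTPS from anywhere
    NaclRule(110, "0.0.0.0/0", 80, 80, allow=True),         # HTTP from anywhere
    # NACLs are stateless, so replies to outbound connections
    # (e.g. downloading patches) must be allowed on ephemeral ports.
    NaclRule(120, "0.0.0.0/0", 1024, 65535, allow=True),
]

print(evaluate_inbound(WEB_SUBNET_NACL, "198.51.100.7", 443))  # True
print(evaluate_inbound(WEB_SUBNET_NACL, "203.0.113.9", 443))   # False: rule 90 matches first
print(evaluate_inbound(WEB_SUBNET_NACL, "198.51.100.7", 22))   # False: no rule matches
```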

To repeat: planning and securing the network is the most important step, and it should be done before running any production workloads.

Setting up Load Balancers for Web and Application servers

Because the web and application servers are spread across multiple Availability Zones, the system needs load balancers to distribute the incoming traffic. Elastic Load Balancing adds high availability and fault tolerance to the architecture: it routes traffic only to healthy instances, across the different Availability Zones.

The proposed architecture shown above uses two Elastic Load Balancers. The first is internet-facing: it receives internet traffic and routes it to the web servers. The second is internal: it cannot be reached by external traffic and only routes requests from the web servers to the application servers. The internal load balancer thus adds a layer of security between external traffic and the application servers.
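The two-hop routing described above can be illustrated with a toy model in Python. This is not the Elastic Load Balancing API: `Target`, `LoadBalancer` and `handle_request` are hypothetical names, the round-robin choice is a simplification, and the `healthy` flag stands in for real target group health checks. It shows an internet-facing balancer in front of the web tier and an internal one between the web and application tiers, each skipping unhealthy targets.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Target:
    instance_id: str
    az: str
    healthy: bool = True  # in AWS, decided by the target group's health checks


class LoadBalancer:
    """Toy round-robin balancer that only routes to healthy targets."""

    def __init__(self, name: str, targets: list[Target]):
        self.name = name
        self.targets = targets
        self._counter = itertools.count()

    def route(self) -> Target:
        healthy = [t for t in self.targets if t.healthy]
        if not healthy:
            raise RuntimeError(f"{self.name}: no healthy targets")
        return healthy[next(self._counter) % len(healthy)]


def handle_request(external: LoadBalancer, internal: LoadBalancer) -> tuple[str, str]:
    web = external.route()  # internet-facing LB picks a web server
    app = internal.route()  # that web server calls the internal LB, which picks an app server
    return web.instance_id, app.instance_id


web_tier = [Target("web-1", "az-a"), Target("web-2", "az-b")]
app_tier = [Target("app-1", "az-a"), Target("app-2", "az-b")]
external = LoadBalancer("internet-facing", web_tier)
internal = LoadBalancer("internal", app_tier)

web_tier[0].healthy = False  # simulate a failed web server in az-a
for _ in range(3):
    print(handle_request(external, internal))  # web-1 is never chosen
```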

Provisioning EC2 instances within Auto Scaling groups

The next step is to provision the EC2 instances in the public and private subnets. As discussed above, the instances in the public subnets are the web servers, while those in the private subnets are the application servers. Separating the user interface layer from the business logic layer is an important part of this architecture, so that no server is overloaded by handling different types of requests at the same time.

Auto Scaling groups replace instances that fail their health checks and automatically scale out when the application is overloaded with requests. This way, each group maintains a minimum number of instances and scales only when required. Scaling policies define when the number of instances increases or decreases.
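As a rough illustration of how a scaling policy turns a metric into a new group size, the sketch below uses a simplified proportional model in the spirit of a target tracking policy: it scales the current capacity by the ratio of the observed metric to its target, then clamps the result to the group's minimum and maximum size. The formula and the `desired_capacity` function are assumptions for illustration, not the exact algorithm AWS uses.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     min_size: int, max_size: int) -> int:
    """Simplified target-tracking model (an assumption, not AWS's exact algorithm)."""
    if current <= 0 or target <= 0:
        raise ValueError("current capacity and target must be positive")
    # Scale in proportion to how far the metric is from its target,
    # rounding up to avoid under-provisioning.
    proposed = math.ceil(current * metric / target)
    # The group never goes below its minimum or above its maximum size.
    return max(min_size, min(max_size, proposed))


# 4 instances at 75% average CPU with a 50% target: scale out
print(desired_capacity(4, metric=75.0, target=50.0, min_size=2, max_size=12))
# 4 instances at 20% average CPU: scale in, but not below the minimum
print(desired_capacity(4, metric=20.0, target=50.0, min_size=2, max_size=12))
```

The minimum size is what keeps the group highly available even when traffic is low; the maximum size caps cost during spikes.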

Setting up the Database tier

The next step is to set up the persistence layer. This is the third tier of the architecture, and it can only be accessed by the second tier, where the application servers reside. You can use the database of your choice; I used Amazon Relational Database Service (RDS) instances. RDS makes it easy to set up, operate and scale a relational database in the cloud, and it can be made highly available (for example with Multi-AZ deployments) and secure. It also offers instance types for specific use cases, e.g. performance-optimized, high-I/O or memory-intensive workloads.
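For the database tier, the important settings are that the instance has no public access, lives in private subnets and only accepts traffic from the application tier. The sketch below builds keyword arguments for the RDS `create_db_instance` call in boto3 (the AWS SDK for Python) but deliberately does not call AWS. The identifier, subnet group name, security group ID, engine, instance class and storage size are placeholders, and enabling Multi-AZ is an assumption about how you would make RDS highly available.

```python
def rds_instance_params(identifier: str, subnet_group: str, db_sg_id: str) -> dict:
    """Keyword arguments for boto3's RDS client `create_db_instance` (sketch only)."""
    return {
        "DBInstanceIdentifier": identifier,
        "Engine": "mysql",                  # placeholder: any RDS engine could be used
        "DBInstanceClass": "db.t3.medium",  # placeholder: size it for your workload
        "AllocatedStorage": 20,             # storage in GiB
        "MasterUsername": "admin",
        "ManageMasterUserPassword": True,   # RDS keeps the password in Secrets Manager
        "DBSubnetGroupName": subnet_group,  # should contain only the private DB subnets
        "VpcSecurityGroupIds": [db_sg_id],  # group that admits only the app tier
        "PubliclyAccessible": False,        # reachable only from inside the VPC
        "MultiAZ": True,                    # synchronous standby in another AZ
    }


params = rds_instance_params("three-tier-db", "private-db-subnets", "sg-0123456789abcdef0")
# With AWS credentials configured, this could be passed to boto3:
#   import boto3
#   boto3.client("rds").create_db_instance(**params)
print(params["PubliclyAccessible"], params["MultiAZ"])
```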

Setting up DNS with Amazon Route 53

To receive internet traffic and route it to the web servers, the architecture uses Amazon Route 53 as its DNS service. Route 53 is a simple, secure, scalable and highly available service that routes end-user traffic to internet applications based on criteria such as latency and geolocation. It also lets you combine multiple routing policies.
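To illustrate latency-based routing, the toy resolver below answers with the endpoint in the Region that has the lowest latency for the requesting user, skipping records whose health check has failed. It is a conceptual model, not the Route 53 API: Route 53 relies on its own latency measurements between users and AWS Regions, whereas here the latencies are simply passed in. The Region pairing, `LatencyRecord`, `resolve` and the load balancer hostnames are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencyRecord:
    region: str
    endpoint: str         # e.g. the DNS name of that Region's load balancer (hypothetical)
    healthy: bool = True  # result of an associated health check


def resolve(records: list[LatencyRecord], latency_ms: dict[str, float]) -> str:
    """Return the endpoint of the healthy record with the lowest latency to the user."""
    candidates = [r for r in records if r.healthy and r.region in latency_ms]
    if not candidates:
        raise LookupError("no healthy record to answer with")
    return min(candidates, key=lambda r: latency_ms[r.region]).endpoint


records = [
    LatencyRecord("us-east-1", "web-alb-use1.example.com"),
    LatencyRecord("eu-west-1", "web-alb-euw1.example.com"),
]
user_in_europe = {"us-east-1": 85.0, "eu-west-1": 18.0}
print(resolve(records, user_in_europe))  # web-alb-euw1.example.com
```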

Depending on how geographically diverse your end-user traffic is, you can also use Amazon CloudFront, a content delivery network (CDN) service, to improve the performance of your web application by caching the most frequently requested content at a nearby edge location.
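The caching idea behind a CDN can be sketched as a time-to-live (TTL) cache in front of the origin: repeated requests for the same path within the TTL are answered at the edge, and only misses or expired entries go back to the web servers. `EdgeCache` and the fake origin are hypothetical; real CloudFront behavior is governed by cache policies and `Cache-Control` headers rather than a single fixed TTL.

```python
import time
from typing import Callable


class EdgeCache:
    """Toy TTL cache standing in for a CDN edge location in front of an origin."""

    def __init__(self, origin: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.origin = origin
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: dict[str, tuple[str, float]] = {}  # path -> (body, expiry time)
        self.origin_requests = 0

    def get(self, path: str) -> str:
        now = self.clock()
        entry = self._entries.get(path)
        if entry is not None and now < entry[1]:
            return entry[0]  # cache hit: served from the edge
        self.origin_requests += 1  # miss or expired: fetch from the origin
        body = self.origin(path)
        self._entries[path] = (body, now + self.ttl)
        return body


fake_now = [0.0]  # controllable clock for the example
cache = EdgeCache(lambda p: f"<html>{p}</html>", ttl_seconds=60, clock=lambda: fake_now[0])
for _ in range(100):
    cache.get("/index.html")
print(cache.origin_requests)  # 1: only the first request reached the origin
fake_now[0] = 61.0            # after the TTL expires, the next request refreshes the entry
cache.get("/index.html")
print(cache.origin_requests)  # 2
```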

In the last part of this post, let’s discuss how the proposed architecture achieves availability, security, performance, reliability and cost optimization.

1. Availability:

The system is spread across multiple Availability Zones, which keeps the services available to users. If one Availability Zone becomes inaccessible or has failures, the other Availability Zone can still serve the traffic. If a running instance fails for any reason, the Elastic Load Balancer detects it through its health checks, removes it from the pool of healthy targets and stops sending traffic to it. In the meantime, the Auto Scaling group terminates the failed instance and launches a replacement from the same launch template or launch configuration, bringing the group back to its desired capacity. This makes the system highly available and fault tolerant.
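The replacement step can be pictured as a reconcile loop: remove instances that failed their health checks, then launch new ones until the group is back at its desired capacity, placing each new instance in the Availability Zone that currently has the fewest instances. This is a hypothetical simulation (`Instance`, `reconcile` and the generated IDs are made up), not how the Auto Scaling service is implemented; `azs` should list only the zones the group can currently launch into, so an impaired zone would simply be left out.

```python
import itertools
from collections import Counter
from dataclasses import dataclass


@dataclass
class Instance:
    instance_id: str
    az: str
    healthy: bool = True


_new_ids = itertools.count(1)  # hypothetical ID generator for replacements


def reconcile(instances: list[Instance], desired: int, azs: list[str]) -> list[Instance]:
    """Drop unhealthy instances and launch replacements, balancing across AZs."""
    # In AWS, the load balancer also stops routing to unhealthy instances.
    survivors = [i for i in instances if i.healthy]
    while len(survivors) < desired:
        per_az = Counter(i.az for i in survivors)
        az = min(azs, key=lambda a: per_az[a])  # least-populated usable AZ
        survivors.append(Instance(f"i-replacement-{next(_new_ids)}", az))
    return survivors


group = [
    Instance("i-1", "az-a"),
    Instance("i-2", "az-b", healthy=False),  # failed its health check
    Instance("i-3", "az-a"),
    Instance("i-4", "az-b"),
]
group = reconcile(group, desired=4, azs=["az-a", "az-b"])
print([(i.instance_id, i.az) for i in group])
```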

2. Security:

A multi-tier architecture increases the overall security of the application. As discussed above, the web, application and database servers are placed in separate tiers, and only the web servers are exposed to incoming internet traffic. The application and database tiers are isolated from external traffic: the application tier can only be reached by the web servers, and the database tier only by the application servers, over the internal network.

Furthermore, each tier has its own security group. Security groups act as a stateful, instance-level firewall for the web, application and database servers. Additionally, AWS Shield Standard automatically protects the infrastructure against the most common network and transport layer DDoS attacks.
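Security groups can reference other security groups as traffic sources, which is a common way to express "only the tier in front of me may connect". The sketch below models that chain for the proposed architecture. The group names and ports (443 on the public load balancer, 80 on the web servers, 8080 for the application, 3306 for a MySQL-compatible database) are assumptions, and sources are matched by exact name rather than by CIDR containment.

```python
from dataclasses import dataclass, field


@dataclass
class SecurityGroup:
    name: str
    # Inbound allow rules as (source, port); the source is a CIDR or another group's name.
    inbound: set[tuple[str, int]] = field(default_factory=set)


def allows(dest: SecurityGroup, source: str, port: int) -> bool:
    """Security groups contain only allow rules: anything not matched is denied.
    They are stateful, so response traffic needs no extra rule."""
    return (source, port) in dest.inbound


INTERNET = "0.0.0.0/0"
alb_sg = SecurityGroup("alb-sg", {(INTERNET, 443)})
web_sg = SecurityGroup("web-sg", {("alb-sg", 80)})
internal_alb_sg = SecurityGroup("internal-alb-sg", {("web-sg", 8080)})
app_sg = SecurityGroup("app-sg", {("internal-alb-sg", 8080)})
db_sg = SecurityGroup("db-sg", {("app-sg", 3306)})

print(allows(db_sg, "app-sg", 3306))   # True: the app tier may reach the database
print(allows(db_sg, "web-sg", 3306))   # False: the web tier cannot skip a tier
print(allows(app_sg, INTERNET, 8080))  # False: the internet cannot reach the app tier
```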

3. Performance:

The architecture uses Amazon CloudFront edge locations along with Route 53, which add a layer of network infrastructure that can significantly improve performance.

CloudFront caches frequently requested content at edge locations and reduces latency for users, so content is delivered to them faster. Route 53 also resolves DNS queries from locations that are typically closer to users than the EC2 origin servers. Because cached requests do not reach the origin, caching also reduces the load on the EC2 servers.

4. Reliability:

A highly reliable system is fault tolerant and can recover from infrastructure or service disruptions. It dynamically acquires computing resources to meet increasing demand. When failures occur, the load balancers stop routing traffic to unhealthy instances, and the Auto Scaling groups and the EC2 auto recovery feature help keep mission-critical applications running.

5. Cost Optimization:

The architecture is flexible enough to accommodate future growth in both the short and the long term. Because it scales up and down as traffic changes, you pay only for what you need. Running infrastructure and services on AWS can cost considerably less than equivalent on-premises infrastructure, by as much as 70% in some cases, although actual savings depend on the workload. To reduce costs further, consider purchasing Reserved Instances for EC2 capacity that you expect to run continuously over a long period (one- or three-year terms).
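Whether a reservation pays off comes down to utilization. The sketch below compares a year of On-Demand usage, billed only for the hours an instance runs, with a Reserved Instance modeled as a fixed hourly charge for every hour of the term. The hourly rates are made-up placeholders rather than real AWS prices, and real Reserved Instance pricing also depends on term length, payment option, Region and instance type.

```python
HOURS_PER_YEAR = 24 * 365


def on_demand_cost(hourly_rate: float, utilization: float) -> float:
    # On-Demand: pay only for the hours the instance actually runs
    return hourly_rate * HOURS_PER_YEAR * utilization


def reserved_cost(effective_hourly_rate: float) -> float:
    # Reserved (simplified): the commitment is billed for every hour, used or not
    return effective_hourly_rate * HOURS_PER_YEAR


def break_even_utilization(on_demand_rate: float, reserved_rate: float) -> float:
    # Above this fraction of the year, the reservation is the cheaper option
    return reserved_rate / on_demand_rate


OD_RATE, RI_RATE = 0.10, 0.065  # hypothetical USD per hour
for utilization in (0.3, 0.65, 1.0):
    od, ri = on_demand_cost(OD_RATE, utilization), reserved_cost(RI_RATE)
    print(f"{utilization:>4.0%}  on-demand ${od:,.2f}  reserved ${ri:,.2f}")
print(f"break-even: {break_even_utilization(OD_RATE, RI_RATE):.0%}")
```

One way to apply this is to reserve roughly the baseline capacity that the Auto Scaling groups never scale below and leave the peaks On-Demand.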

One of the design principles for cost optimization is to use managed services to reduce the cost of ownership, e.g. the operational overhead of maintaining servers. Amazon RDS is a managed service, which is one of the reasons it is used in the proposed architecture.

Stay tuned for future posts about architecting in the cloud!